THE DIGITAL WHISPER

In boardrooms and tech conferences, in casual conversations and strategic documents, two terms are often used interchangeably: Artificial Intelligence (AI) and Machine Learning (ML). They are frequently uttered in the same breath, as if they were synonyms, conjuring images of futuristic robots, self-driving cars, and algorithms that can predict our every desire. The two are closely related and often interdependent, but treating them as identical can lead to significant strategic missteps, misallocated resources, and ultimately, failed projects.

As a digital architect who has spent over a decade navigating the intricate landscapes of advanced technology implementation, I’ve seen this confusion play out repeatedly. Businesses invest in “AI solutions” only to find they’ve acquired a sophisticated ML model that doesn’t solve their broader “intelligent automation” needs. Or conversely, they seek a generalized “AI” when a focused “ML” application is precisely what’s required. Understanding the nuanced difference between AI and Machine Learning is not merely an academic exercise; it’s a foundational prerequisite for any organization aiming to harness these powerful technologies effectively. This article will unravel the core distinction, offering original insights and a strategic framework to guide your journey from conceptual understanding to practical, value-driven implementation.

DEFINITIONS AND RELATIONSHIPS

To truly grasp the strategic implications, we must first dissect the fundamental definitions of Artificial Intelligence and Machine Learning, and understand their hierarchical relationship.

Artificial Intelligence (AI): The Grand Vision

At its broadest, Artificial Intelligence (AI) is a comprehensive field of computer science whose goal is to create machines that can perform tasks traditionally requiring human intelligence. These tasks include problem-solving, learning, decision-making, understanding language, recognizing patterns, and even mimicking human creativity. Think of AI as the overarching concept, the grand ambition to build “smart” machines. AI encompasses a vast array of techniques and methodologies, not all of which involve learning from data. Early AI systems, for instance, relied heavily on rule-based programming and expert systems, where human knowledge was explicitly coded into the machine.

Machine Learning (ML): The Learning Engine Within AI

Machine Learning (ML) is a specific subset of Artificial Intelligence. It focuses on enabling systems to learn from data without being explicitly programmed. Instead of hard-coding rules, ML algorithms use statistical techniques to identify patterns in large datasets. This allows them to make predictions or decisions based on new, unseen data. ML is the engine that powers many of today’s most impressive AI applications. If AI is the brain, ML is the part of the brain that learns and adapts.
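To make the contrast between hand-coded rules and learning from data concrete, here is a minimal Python sketch. The spam-flagging task, the keyword list, and the four-message training set are all invented for illustration, and the "learning" step is a deliberately simple threshold fit rather than a production algorithm.

```python
# Rule-based approach: a human writes the decision logic directly.
SPAM_KEYWORDS = {"winner", "free", "prize"}  # hypothetical hand-picked list


def rule_based_is_spam(message: str) -> bool:
    """Flag a message when it contains at least two hand-picked keywords."""
    words = set(message.lower().split())
    return len(words & SPAM_KEYWORDS) >= 2


# Learning approach: the decision boundary is estimated from labeled examples.
def count_exclamations(message: str) -> int:
    """A single, very crude feature: how many '!' characters appear."""
    return message.count("!")


def fit_threshold(examples: list[tuple[str, bool]]) -> float:
    """Pick the midpoint between the mean feature value of each class."""
    spam = [count_exclamations(m) for m, is_spam in examples if is_spam]
    ham = [count_exclamations(m) for m, is_spam in examples if not is_spam]
    return (sum(spam) / len(spam) + sum(ham) / len(ham)) / 2


# Toy training data, made up for this sketch.
TRAINING = [
    ("Claim your prize now!!!", True),
    ("You won!!!! Click here!!", True),
    ("Meeting moved to 3pm", False),
    ("Thanks for the update!", False),
]

threshold = fit_threshold(TRAINING)  # derived from data, not written by hand


def learned_is_spam(message: str) -> bool:
    return count_exclamations(message) > threshold
```

In the first function the behavior changes only when someone edits the keyword list. In the second, the behavior changes when the training data changes, which is the property that defines ML in the sense used here.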

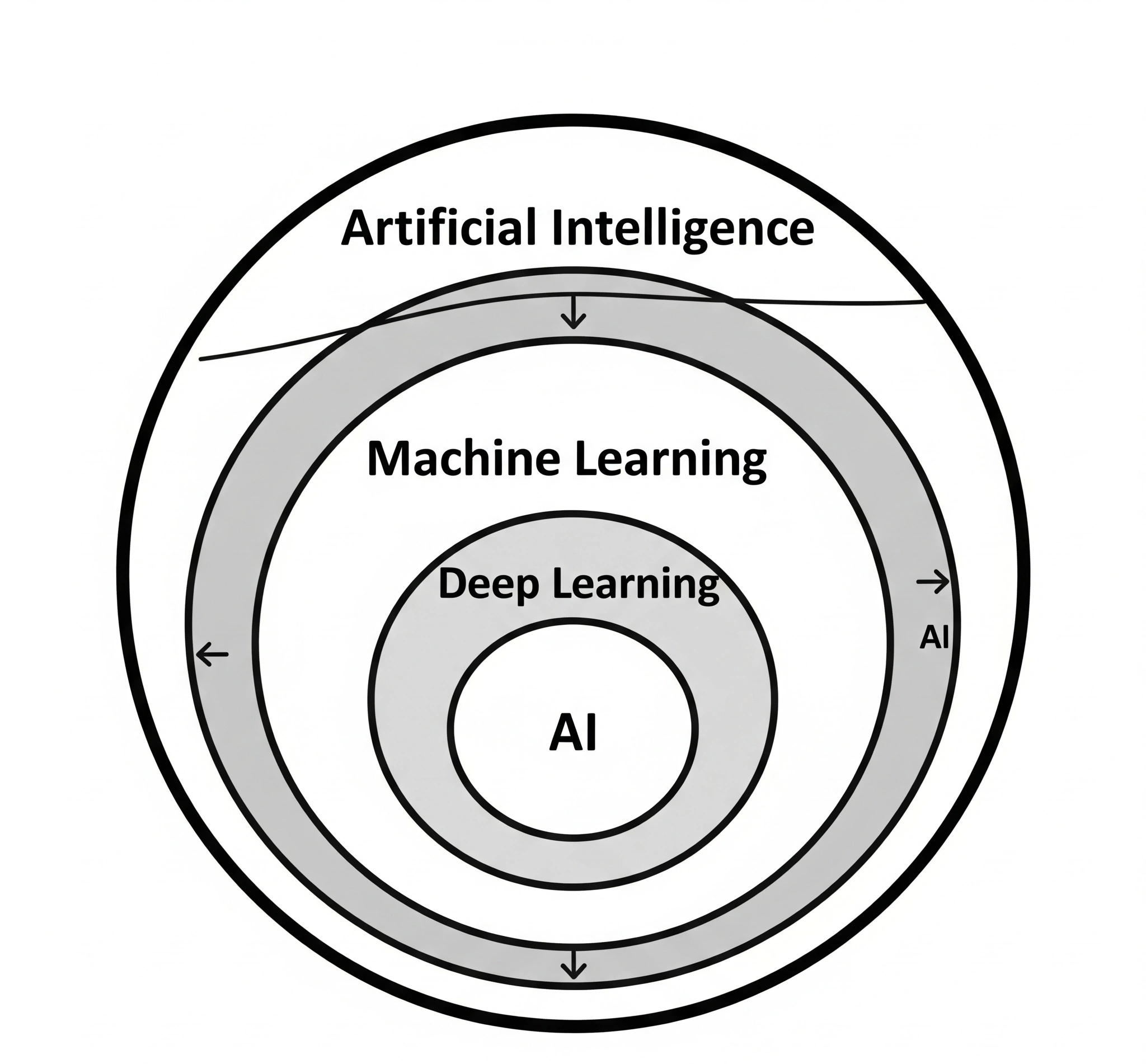

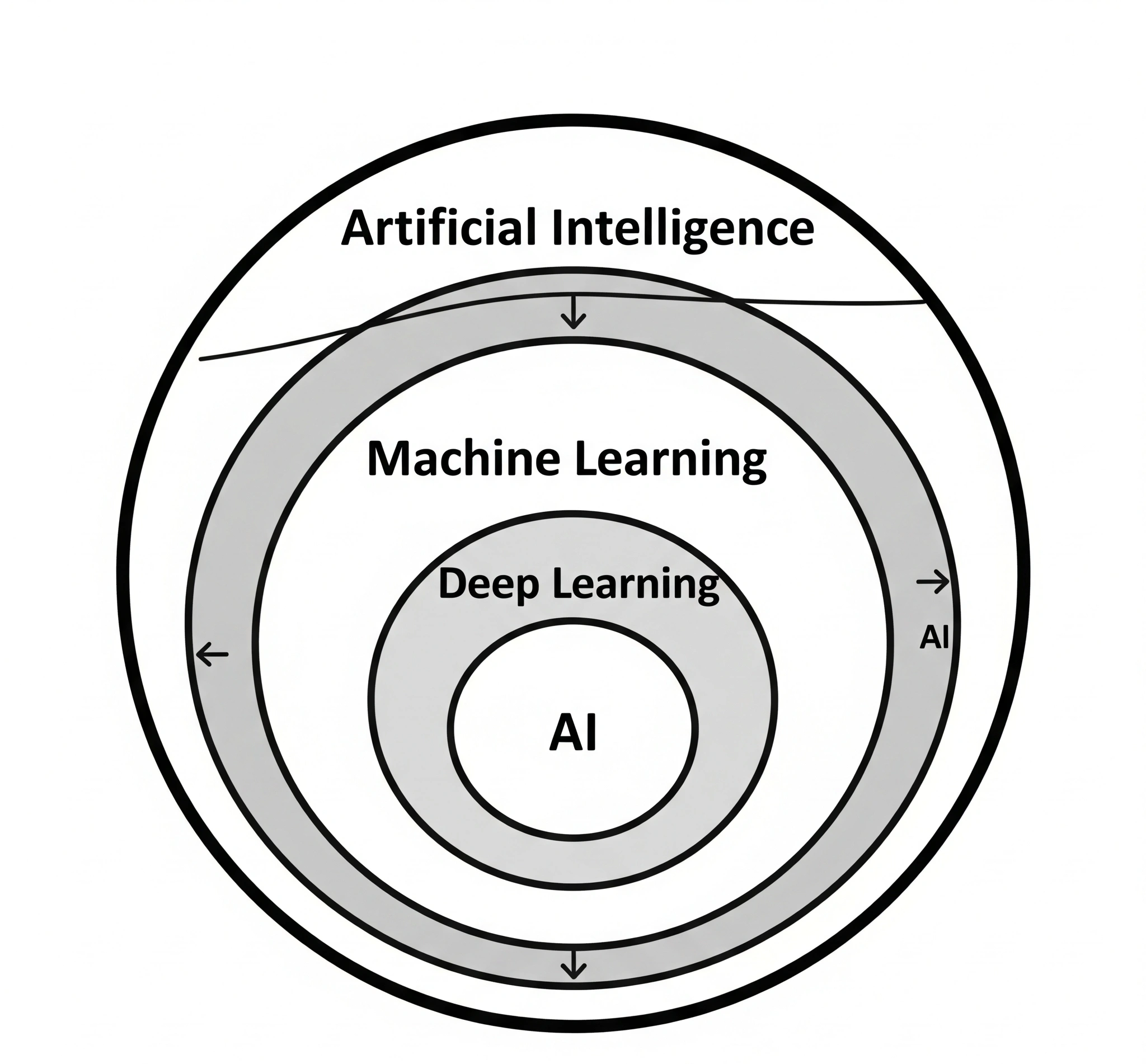

The relationship can be visualized as concentric circles: AI is the largest circle, encompassing the entire field of intelligent machines. Machine Learning is a significant, but smaller, circle contained within AI. Further, Deep Learning (DL) is a specialized subset of Machine Learning built on multi-layer neural networks, which are loosely inspired by the structure of the human brain. It is particularly effective for complex pattern recognition in images, speech, and text.
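The nesting can also be written down as plain set containment. The Python sketch below uses a handful of technique names chosen purely as examples; the sets are nowhere near exhaustive and are not an official taxonomy.

```python
# Illustrative technique names only; the lists are far from exhaustive.
DEEP_LEARNING = {"convolutional neural networks", "transformers"}
MACHINE_LEARNING = DEEP_LEARNING | {"decision trees", "k-means clustering"}
ARTIFICIAL_INTELLIGENCE = MACHINE_LEARNING | {"expert systems", "search and planning"}

# Each inner circle is a proper subset of the one around it.
assert DEEP_LEARNING < MACHINE_LEARNING < ARTIFICIAL_INTELLIGENCE

# Techniques that count as AI without being ML.
non_ml_ai = ARTIFICIAL_INTELLIGENCE - MACHINE_LEARNING
```

The last line is the part that tends to get overlooked: some of the AI circle lies outside ML entirely.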

WHERE THEY FIT IN PRACTICE

Understanding the theoretical distinction is one thing; applying it in the real world is another. The ecosystem of AI and ML implementation reveals how these concepts manifest in practical applications and why their precise definition matters for project success.

AI in the Broader Context: The “Intelligent” Goal

AI, as the broader field, drives the development of systems that exhibit various forms of intelligence. This includes:

- Artificial Narrow Intelligence (ANI): Also known as “Weak AI,” ANI systems are designed and trained for a particular task. Examples include virtual assistants (like Siri or Alexa), recommendation engines, spam filters, and image recognition software. Most of the AI we interact with today falls into this category.

- Artificial General Intelligence (AGI): Also known as “Strong AI,” AGI refers to a hypothetical AI that can understand, learn, and apply intelligence to any intellectual task that a human being can. This is still largely in the realm of research and science fiction.

- Artificial Superintelligence (ASI): A hypothetical AI that would surpass human intelligence in virtually every field, including scientific creativity, general wisdom, and social skills.

When a business talks about “AI transformation,” they are often envisioning a future where multiple intelligent systems, potentially leveraging various AI techniques (including ML), work together to achieve complex, human-like goals.

ML as the Core Driver: Learning from Data

Machine Learning provides the adaptive capabilities for many AI applications. Different types of ML algorithms are suited for different learning tasks:

- Supervised Learning: Algorithms learn from labeled data (input-output pairs) to make predictions. Used in spam detection, fraud detection, and medical diagnosis.

- Unsupervised Learning: Algorithms find patterns and structures in unlabeled data. Used in customer segmentation, anomaly detection, and data compression.

- Reinforcement Learning: Algorithms learn to make decisions by performing actions in an environment and receiving rewards or penalties. Used in robotics, game playing (e.g., AlphaGo), and autonomous systems.
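The three learning types above differ mainly in what feedback the algorithm receives. The Python sketch below shows one toy instance of each, using only the standard library. The function names, the usage figures, and the reward values are assumptions made for illustration; the reinforcement example is a greedy two-armed bandit, the simplest form of learning from rewards, and not the method behind systems like AlphaGo.

```python
from statistics import mean


# Supervised: learn from labeled (value, label) pairs.
def fit_centroids(labeled: list[tuple[float, str]]) -> dict[str, float]:
    by_label: dict[str, list[float]] = {}
    for value, label in labeled:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids: dict[str, float], value: float) -> str:
    """Return the label whose centroid is closest to the new value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


# Unsupervised: no labels; split values into two groups (1-D k-means, k=2).
# Assumes the input holds at least two distinct values.
def two_means(values: list[float], iterations: int = 10) -> tuple[list[float], list[float]]:
    low_center, high_center = min(values), max(values)
    low, high = [], []
    for _ in range(iterations):
        low = [v for v in values if abs(v - low_center) <= abs(v - high_center)]
        high = [v for v in values if abs(v - low_center) > abs(v - high_center)]
        low_center, high_center = mean(low), mean(high)
    return low, high


# Reinforcement: estimate action values from rewards (greedy bandit).
def learn_best_action(reward_for, actions: list[str], rounds: int = 20) -> str:
    if rounds < len(actions):
        raise ValueError("need at least one round per action")
    totals = {a: 0.0 for a in actions}
    counts = {a: 0 for a in actions}
    for step in range(rounds):
        if step < len(actions):
            action = actions[step]  # try every action once first
        else:
            action = max(actions, key=lambda a: totals[a] / counts[a])
        totals[action] += reward_for(action)
        counts[action] += 1
    return max(actions, key=lambda a: totals[a] / counts[a])


# Made-up monthly data usage figures (GB) for the first two examples.
centroids = fit_centroids(
    [(1.0, "low usage"), (2.0, "low usage"), (9.0, "high usage"), (11.0, "high usage")]
)
segment = predict(centroids, 3.0)
groups = two_means([1.0, 2.0, 9.0, 11.0])
best = learn_best_action(lambda a: {"wait": 0.2, "act": 1.0}[a], ["wait", "act"])
```

The supervised function needs the labels, the clustering function receives only raw values, and the bandit sees nothing but a reward after each action it tries.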

Beyond these core types, ML also underpins other specialized AI fields:

- Natural Language Processing (NLP): Enables computers to understand, interpret, and generate human language (e.g., sentiment analysis, chatbots).

- Computer Vision: Enables computers to “see” and interpret visual information from images and videos (e.g., facial recognition, object detection).

In practice, a company might use an ML algorithm (e.g., a neural network) for a computer vision task (an AI field) to enable an autonomous vehicle (a broader AI system) to detect pedestrians. The distinction helps in scoping projects: are we building a learning *component* (ML) or an overarching *intelligent system* (AI)?

PROOF OF EXPERIENCE

Let me share a real-world scenario, anonymized and generalized, that perfectly illustrates the confusion between AI and ML and its impact on project execution. We’ll call this “The Customer Service Bot Initiative.”

The Initial Misconception: “AI” as a Magic Bullet

A large telecommunications company, let’s call them “ConnectCo,” decided to launch a major “AI-powered customer service bot” to handle common inquiries, reduce call center volume, and improve customer satisfaction. The executive team, driven by industry buzz, mandated an “AI solution.”

The project began with a broad, somewhat vague directive: “Implement AI to revolutionize customer service.” The internal project team, comprising IT and business stakeholders, interpreted “AI” as a single, all-encompassing solution that would magically understand customer intent, resolve complex issues, and even empathize. They selected a vendor known for its “AI platform” with strong Natural Language Processing (NLP) capabilities.

The vendor’s platform was indeed powerful, excelling at understanding sentiment and extracting entities from text. Their data scientists quickly trained an ML model on millions of past customer interactions. The model could accurately classify customer intent (e.g., “billing inquiry,” “technical support,” “service upgrade”). It could even route inquiries to the correct department.

However, after six months, the bot’s performance was underwhelming. Customers were frustrated. They often had to re-explain issues or were routed incorrectly. The bot could understand *what* the customer was saying (an ML/NLP capability), but it struggled to *solve* anything beyond the most basic, pre-defined questions. It lacked “common sense” and the ability to handle multi-turn conversations or infer context that wasn’t explicitly stated.

The Unraveling: A Mismatch of Expectations

When I was brought in to assess the stalled project, the core problem became evident: ConnectCo had invested in a sophisticated Machine Learning solution (specifically, an NLP model for intent recognition and routing) but had Artificial Intelligence expectations.

The ML model was doing what it had been trained to do: learning patterns from data to classify and route. But true “AI-powered customer service” (as the executives envisioned) required more than just ML. It needed:

- Knowledge Representation: A structured way for the bot to access and reason with ConnectCo’s vast knowledge base (FAQs, troubleshooting guides, product manuals). This is a classical AI problem, not purely ML.

- Reasoning and Planning: The ability to infer solutions from incomplete information or plan a series of actions to resolve a customer’s issue. Again, a broader AI capability, often involving symbolic AI or expert systems, not just pattern recognition from data.

- Context Management: Remembering previous turns in a conversation and applying that context to subsequent queries, which is challenging for many pure ML models without explicit state management.

- Integration with Backend Systems: The ability to actually *perform* actions like checking account balances, initiating service changes, or scheduling technician visits. This requires robust integration, often beyond the scope of a pure ML model.

The screenshot below illustrates the disconnect. The left side shows the ML model’s excellent performance in classifying intent. The right side, however, shows the bot’s inability to *act* intelligently or engage in a meaningful dialogue, leading to customer frustration. The “AI” label had led to an overestimation of the ML component’s capabilities.

The Resolution: Redefining “AI” with “ML” Precision

Our resolution involved clarifying the scope. We explained that the existing ML model was a powerful *component* of an AI system. To achieve true “AI-powered customer service,” ConnectCo needed to build additional AI layers around that ML core. This included:

- Implementing a Knowledge Graph: A structured database of company knowledge for the bot to query and reason with.

- Developing Rule-Based Logic: For handling common, deterministic processes that didn’t require learning.

- Enhancing Integration: Building robust APIs to allow the bot to interact with billing, service, and scheduling systems.

- Phased Rollout: Starting with the ML-powered intent recognition and routing, then gradually adding more complex AI capabilities.

By understanding that ML is a powerful *tool* within the broader AI toolbox, ConnectCo could reset expectations, reallocate resources, and build a truly intelligent customer service solution incrementally.
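The layering described above can be sketched in a few dozen lines of Python. Everything here is hypothetical: the function names, the account-number format, the canned balance, and the knowledge entry are invented for illustration, and `classify_intent` is a keyword stand-in for the trained intent model, not the vendor's actual system.

```python
from dataclasses import dataclass, field
from typing import Optional


def classify_intent(text: str) -> str:
    """Stand-in for the trained ML intent model (keyword matching, illustration only)."""
    lowered = text.lower()
    if "bill" in lowered or "balance" in lowered:
        return "billing_inquiry"
    if "upgrade" in lowered:
        return "service_upgrade"
    return "unknown"


# Hypothetical knowledge store; a real system would query a knowledge graph.
KNOWLEDGE = {"service_upgrade": "Upgrades can be requested online or by phone."}


def fetch_balance(account_id: str) -> float:
    """Hypothetical backend call; a real bot would call a billing API here."""
    return {"A-100": 42.50}.get(account_id, 0.0)


@dataclass
class Conversation:
    """Explicit state carried across turns (context management)."""
    account_id: Optional[str] = None
    pending_intent: Optional[str] = None
    history: list[str] = field(default_factory=list)


def handle_turn(convo: Conversation, text: str) -> str:
    convo.history.append(text)
    # Remember an account id (assumed format "A-<digits>") once it is given.
    for token in text.split():
        cleaned = token.strip(".,!?")
        if cleaned.startswith("A-"):
            convo.account_id = cleaned
    intent = classify_intent(text)
    if intent == "unknown" and convo.pending_intent:
        intent = convo.pending_intent  # reuse context from the earlier turn
    # Rule-based logic for a deterministic process, plus backend integration.
    if intent == "billing_inquiry":
        if convo.account_id is None:
            convo.pending_intent = intent
            return "Please share your account number."
        convo.pending_intent = None
        return f"Your balance is ${fetch_balance(convo.account_id):.2f}."
    if intent in KNOWLEDGE:
        return KNOWLEDGE[intent]
    return "Let me connect you with an agent."
```

A two-turn exchange such as `handle_turn(convo, "What is my balance?")` followed by `handle_turn(convo, "It is A-100.")` only resolves because of the state object and the backend call. The classifier on its own labels the second message as unknown, which mirrors the gap ConnectCo ran into.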

ORIGINAL INSIGHT

The core insight from ConnectCo’s “Customer Service Bot Initiative,” and countless similar projects, is this: The persistent conflation of “Artificial Intelligence” and “Machine Learning” is not merely a semantic error; it is a strategic liability that consistently leads to misaligned expectations, scope creep, budget overruns, and project failures.

The Dangerous Gap: Aspiration vs. Reality

Looking under the hood of projects like this reveals that the confusion creates a dangerous gap between aspiration and reality. When stakeholders use “AI” as a catch-all term for any advanced technological capability, they implicitly assume a level of generalized intelligence, adaptability, and problem-solving that most current systems (which are predominantly ML-based) simply do not possess.

Critical Pitfalls of Mislabeling

This mislabeling leads to several critical pitfalls:

- Unrealistic Expectations: Believing an ML model can “think” or “reason” like a human, when it is designed only to find patterns in data.

- Scope Bloat: Adding features to a project that require true AI capabilities (like complex reasoning or common sense) when only ML components are being developed.

- Resource Misallocation: Investing heavily in data scientists and ML engineers when the core problem requires AI specialists in knowledge representation, symbolic AI, or systems integration.

- Disillusionment and “AI Fatigue”: When projects fail to deliver on exaggerated “AI” promises, organizations become skeptical of the technology’s true potential, hindering future innovation.

The distinction matters because it forces precision in problem definition. Are you trying to build a system that *learns from data to make predictions* (ML)? Or are you trying to build a system that *mimics human-like intelligence across various cognitive functions* (AI)? The former is a well-defined, achievable goal for many businesses today. The latter is a much broader, more complex, and often multi-faceted endeavor that may require combining ML with other AI techniques. Clarity here is the bedrock of successful AI/ML strategy.

ADAPTIVE ACTION FRAMEWORK FOR AI/ML STRATEGY

To effectively navigate the landscape of AI and Machine Learning, I propose an Adaptive Action Framework. This framework emphasizes clarity, strategic alignment, and realistic expectation setting, ensuring your projects deliver tangible value.

1. Define the Problem, Not Just the Technology (Problem-First Approach):

- Action: Before even thinking “AI” or “ML,” precisely define the business problem. What specific task needs to be automated or made more intelligent? What are the measurable outcomes?

- Benefit: Focuses efforts on solving real business challenges, preventing technology for technology’s sake.

2. Deconstruct the “Intelligence” Required (Capability Mapping):

- Action: Analyze the problem’s intelligence needs. Does it require learning from data to predict (ML)? Does it need understanding language (NLP, an AI field that today is largely ML-driven)? Or does it require reasoning, planning, or general knowledge (broader AI)?

- Benefit: Helps identify whether a pure ML solution suffices or if a more comprehensive AI approach, potentially combining ML with other techniques, is necessary.

3. Assess Data Readiness (ML Prerequisite):

- Action: If ML is identified as a core component, rigorously assess your data. Is it sufficient, clean, and relevant for training? Do you have the infrastructure to manage it?

- Benefit: Ensures the foundational element for ML success is in place, avoiding “garbage in, garbage out” scenarios.

4. Build Incrementally, Think Holistically (Phased Implementation):

- Action: Start with an ML-focused pilot if appropriate, demonstrating quick wins. Then, incrementally add broader AI capabilities (e.g., knowledge bases, reasoning engines) as needed, integrating them into a holistic intelligent system.

- Benefit: Manages complexity, reduces risk, and allows for continuous validation of value at each stage.

5. Cultivate a Hybrid Talent Pool (Cross-Functional Expertise):

- Action: Foster collaboration between data scientists (ML experts), software engineers (integration), and domain experts (business context). Consider bringing in AI architects who understand both ML and broader AI paradigms.

- Benefit: Bridges the technical and business divide, ensuring solutions are both technically sound and operationally relevant.

6. Manage Expectations Through Clear Communication (Stakeholder Alignment):

- Action: Educate stakeholders on the precise capabilities and limitations of ML vs. broader AI. Use clear, unambiguous language to define project scope and expected outcomes.

- Benefit: Prevents disillusionment, builds trust, and ensures all parties are aligned on what the technology can and cannot do.
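Steps 2 and 3 of the framework lend themselves to a simple scoping checklist. The Python sketch below is one possible way to encode it. The capability names, the mapping, and the `min_examples` default of 1000 are placeholders assumed for illustration, not recommended values.

```python
# Hypothetical mapping from a required capability to the kind of approach it points to.
CAPABILITY_TO_APPROACH = {
    "predict from historical data": "ML",
    "classify text or images": "ML",
    "reason over a knowledge base": "broader AI",
    "plan multi-step actions": "broader AI",
    "act on backend systems": "systems integration",
}


def scope_project(capabilities: list[str], labeled_examples: int, min_examples: int = 1000) -> dict:
    """Summarize which approaches a project needs and whether ML data looks sufficient."""
    approaches = sorted({CAPABILITY_TO_APPROACH.get(c, "unclassified") for c in capabilities})
    needs_ml = "ML" in approaches
    return {
        "approaches": approaches,
        "pure_ml_sufficient": approaches == ["ML"],
        # Data readiness only applies when an ML component is in scope.
        "data_ready": (labeled_examples >= min_examples) if needs_ml else None,
    }


# A bot like ConnectCo's, described with made-up numbers.
bot_scope = scope_project(
    ["classify text or images", "reason over a knowledge base", "act on backend systems"],
    labeled_examples=5000,
)
```

For a project shaped like the customer service bot, the result reports that a pure ML solution is not sufficient even though the data is ready, which is the conversation to have with stakeholders before a vendor is selected.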

By applying this framework, organizations can move beyond the buzzwords. They can build AI and ML strategies that are precise, practical, and truly transformative.

VISION FORWARD & AUTHOR BIO

The journey into Artificial Intelligence and Machine Learning is not a sprint, but a marathon of continuous learning and strategic adaptation. The distinction between AI as the expansive vision of intelligent machines and ML as the powerful learning engine within it is more than academic; it is a critical lens through which organizations must view their digital future. By embracing clarity in definition, precision in problem-solving, and a holistic approach to implementation, businesses can avoid common pitfalls and unlock the immense, truly transformative potential that these technologies promise. The future belongs to those who understand not just what AI and ML *are*, but what they *can realistically do* for their unique challenges.